Several of our customers have asked Pindrop whether our recently launched deepfake detection product, Pindrop® Pulse, can detect synthetic content generated by OpenAI’s[1] new Voice Engine. In response, Pindrop has become the first deepfake detection provider in the industry to announce its accuracy against OpenAI’s Voice Engine 2022: 98.23%, measured on a dataset of 10,000 samples generated with OpenAI’s available text-to-speech engine. This demonstrates that deepfake detection, combined with Pindrop’s voice analysis and a multi-factor approach, continues to be a robust authentication strategy. We encourage OpenAI to release a representative dataset from its Voice Engine 2024 so that Pindrop can extend our benchmarking to the latest release and give clarity to an industry that has invested hundreds of millions of dollars in building robust multi-factor authentication systems using voice biometrics.

Understanding OpenAI’s Voice Engine Announcement

On March 29, 2024, OpenAI published a blog post titled “Navigating the Challenges and Opportunities of Synthetic Voices.” In this write-up, OpenAI included 17 audio samples from its text-to-speech (TTS) model, Voice Engine, and recommended phasing out voice-based authentication as a security measure for accessing bank accounts and other sensitive information.

As researchers, when we run an experiment or analyze data, we need a large dataset to support conclusive (i.e., statistically “significant”) findings. Whatever you are studying, the process for evaluating significance is the same: you state a null hypothesis (i.e., the claim you are trying to disprove) and collect a sample of data to test it. Because OpenAI released only 17 audio samples, the 2024 dataset is not representative enough to support a statistically conclusive determination.

Therefore, our research focused on OpenAI’s first TTS engine, released in 2022. That release included two TTS models, tts-1 and tts-1-hd, and allowed users to generate synthetic content from six voices pre-created by OpenAI. On March 29, 2024, OpenAI announced[2] the ability to generate synthetic content by cloning a voice sample provided by the end user. OpenAI did not clarify whether the 2024 release uses the same TTS engine as the 2022 release or reuses some of its underlying components. If it does either, Pindrop expects its accuracy on the 2024 release to be similar to its accuracy on the 2022 release without any additional adaptation.
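To illustrate why 17 samples cannot pin down an accuracy figure while 10,000 can, the sketch below computes a 95% Wilson score interval for a detection rate. The Wilson interval is a standard textbook choice we swapped in for illustration; it is not necessarily the method Pindrop uses, and the 17-out-of-17 case is a hypothetical best case, not a reported result.

```python
import math


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion.

    z = 1.96 corresponds to an approximately 95% two-sided confidence level.
    """
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("need n > 0 and 0 <= successes <= n")
    p_hat = successes / n
    denom = 1 + z**2 / n
    centre = (p_hat + z**2 / (2 * n)) / denom
    half_width = (z / denom) * math.sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2))
    # Clamp to [0, 1] to absorb floating-point rounding at the extremes.
    return max(0.0, centre - half_width), min(1.0, centre + half_width)


if __name__ == "__main__":
    # Hypothetical best case for the 2024 samples: all 17 flagged as synthetic.
    print("17/17:", wilson_interval(17, 17))
    # 98.23% of the 10,000-sample 2022 dataset corresponds to 9,823 detections.
    print("9823/10000:", wilson_interval(9823, 10_000))
```

Even a perfect 17/17 only bounds the true rate to roughly 0.82 to 1.0, whereas 9,823 out of 10,000 narrows it to roughly 0.980 to 0.985.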

Pindrop® Pulse’s detection accuracy on OpenAI’s Voice Engine

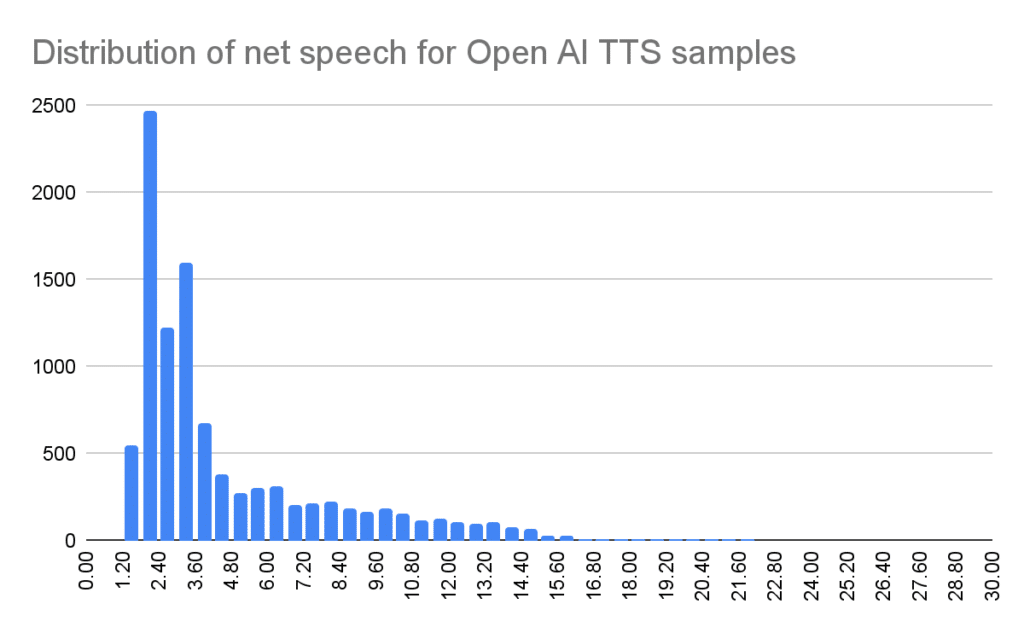

Pindrop’s researchers generated 10,000 audio samples using the six pre-made voices of OpenAI’s 2022 TTS Voice Engine with both models OpenAI provides: the standard “tts-1” model and the “tts-1-hd” model. The samples in this dataset have an average duration of 4.74 seconds of net speech per sample. The figure below plots the distribution of net speech.
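The post does not describe how net speech was measured. As a rough sketch, assuming “net speech” means the duration of a clip with silences removed, a crude energy-based voice activity detector could estimate it. The frame size, the -40 dBFS threshold, and the function names below are hypothetical choices for illustration only.

```python
import math
from typing import Sequence


def net_speech_seconds(
    samples: Sequence[float],
    sample_rate: int = 16_000,
    frame_ms: int = 20,
    threshold_db: float = -40.0,
) -> float:
    """Estimate net speech as the total duration of frames louder than a threshold.

    Hypothetical energy-based VAD: samples are assumed normalized to [-1.0, 1.0],
    and threshold_db is relative to full scale. Trailing samples that do not
    fill a whole frame are ignored.
    """
    frame_len = sample_rate * frame_ms // 1000
    if frame_len <= 0:
        raise ValueError("frame too short for this sample rate")
    voiced_frames = 0
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        rms = math.sqrt(sum(x * x for x in frame) / frame_len)
        # Silent frames (rms == 0) have no finite dB level and never count as speech.
        if rms > 0 and 20 * math.log10(rms) > threshold_db:
            voiced_frames += 1
    return voiced_frames * frame_len / sample_rate


def mean_net_speech(clips: Sequence[Sequence[float]], sample_rate: int = 16_000) -> float:
    """Average net speech, in seconds, over a collection of clips."""
    if not clips:
        raise ValueError("no clips")
    return sum(net_speech_seconds(c, sample_rate) for c in clips) / len(clips)


if __name__ == "__main__":
    # 1 s of a 440 Hz tone (standing in for speech) followed by 1 s of silence.
    sr = 16_000
    tone = [0.5 * math.sin(2 * math.pi * 440 * n / sr) for n in range(sr)]
    print(net_speech_seconds(tone + [0.0] * sr, sr))  # 1.0
```

A production system would use a trained VAD rather than a fixed energy threshold, since background noise can easily exceed -40 dBFS.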

We analyzed this dataset using the Pindrop® Pulse[3] deepfake detection system. Using the default thresholds, Pulse correctly detected 98.23% of the deepfakes and mistakenly labeled only 0.14% of the audio samples as “not synthetic” (i.e., false negatives), while the remaining 1.63% were deemed inconclusive. We also tested the 17 samples from OpenAI’s Voice Engine 2024, and Pulse performed at a similar level of accuracy. The OpenAI dataset included utterances in multiple languages, and our product’s accuracy was robust across these languages. It is important to note that these results were obtained with a Pulse model that had never been trained on OpenAI’s 2022 or 2024 Voice Engine. Based on our experience with other new models (e.g., Meta Voicebox[4]), we expect our product’s accuracy to improve beyond 99% once we adapt our model on a larger dataset from these engines.
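The three reported outcomes (detected, false negative, inconclusive) suggest a scoring scheme with two thresholds and an “inconclusive” band between them. The sketch below assumes exactly that; the score scale, the 0.8/0.2 thresholds, and the function names are hypothetical, since Pulse’s scoring is not public. On an all-deepfake dataset of 10,000 samples, the reported percentages correspond to 9,823 detections, 14 false negatives, and 163 inconclusive results.

```python
from collections import Counter
from typing import Iterable

VERDICTS = ("synthetic", "not synthetic", "inconclusive")


def classify(score: float, synthetic_at: float = 0.8, genuine_at: float = 0.2) -> str:
    """Map a hypothetical synthetic-likelihood score in [0, 1] to a three-way verdict.

    Scores between the two thresholds land in an 'inconclusive' band instead of
    being forced into a binary decision.
    """
    if score >= synthetic_at:
        return "synthetic"
    if score <= genuine_at:
        return "not synthetic"
    return "inconclusive"


def outcome_rates(verdicts: Iterable[str]) -> dict[str, float]:
    """Percentage of each verdict over a dataset known to contain only deepfakes."""
    counts = Counter(verdicts)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("empty dataset")
    # On an all-deepfake set, 'not synthetic' verdicts are the false negatives.
    return {v: 100 * counts[v] / total for v in VERDICTS}


if __name__ == "__main__":
    verdicts = ["synthetic"] * 9823 + ["not synthetic"] * 14 + ["inconclusive"] * 163
    print(outcome_rates(verdicts))
```

The inconclusive band trades a small share of undecided calls for fewer confident mistakes; raising `synthetic_at` or lowering `genuine_at` widens that band.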

We went one step further and analyzed the audio from the OpenAI dataset to try to deduce the possible subcomponents of OpenAI’s TTS system. Our analysis suggests, with a likelihood of 75%, that it is composed of an XTTS variant (e.g., XTTS v1[5] or XTTS v2[6]) and a GAN-based non-autoregressive neural vocoder (e.g., HiFi-GAN or Multi-band MelGAN). While OpenAI does not provide details about the TTS architecture it used, this analysis can provide further insights for improving the Pindrop® Pulse liveness detection system.

These results were in line with our expectations, because Pindrop® Pulse has been tested against more than 350 TTS engines to date. These include several of the leading voice cloning systems, some of which arguably generate far more realistic output than OpenAI Voice Engine. In fact, Pindrop has partnered with Respeecher[7], an Emmy Award-winning AI voice cloning technology, to help ensure that Pindrop® Pulse is continuously tested against the industry’s most sophisticated voice clones. We are also expanding these partnerships to several other leading TTS engines.

Recommending an even better approach to Voice Engine safety

In its March 29 blog post[8], OpenAI includes a section on “building Voice Engine safely” that emphasizes its AI safety principles. We appreciate OpenAI’s commitment to AI safety, shown by its decision not to broadly release the new text-to-speech engine. Additionally, OpenAI has adopted usage policies such as explicit and informed consent for voice cloning and explicit disclosure of synthetically generated media.

Pindrop has two suggestions for OpenAI to further improve its AI safety stance:

- We encourage OpenAI to release a 2024 Voice Engine dataset for Pindrop’s technology to analyze, so that the industry can make its own data-based decisions on how to fortify its defenses against deepfakes from OpenAI’s Voice Engine.

- We applaud OpenAI’s emphasis on watermarking, but it is important to remember that watermarking alone is neither sufficient nor robust[9]; the research community has demonstrated its vulnerability[10]. Pindrop believes it is preferable to combine watermarks with liveness detection[11], and we invite OpenAI to partner with Pindrop to strengthen the ability of Good AI to fight the bad actors using Gen AI.
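One way to read the suggestion to combine watermarks with liveness detection is as an asymmetric decision rule: a found watermark is strong evidence of synthesis, but a missing one is not evidence of a genuine voice. The sketch below assumes hypothetical watermark and liveness detectors and a hypothetical 0.8 threshold; it is an illustration of the reasoning, not Pindrop’s implementation.

```python
from dataclasses import dataclass


@dataclass
class DetectionSignals:
    watermark_found: bool   # output of a hypothetical watermark decoder
    liveness_score: float   # hypothetical synthetic-likelihood score in [0, 1]


def combined_verdict(signals: DetectionSignals, synthetic_at: float = 0.8) -> str:
    # A decoded watermark is strong positive evidence that the audio is synthetic.
    if signals.watermark_found:
        return "synthetic"
    # A missing watermark proves nothing: it may have been stripped or degraded,
    # or the generator may never have embedded one. Fall back to liveness detection.
    if signals.liveness_score >= synthetic_at:
        return "synthetic"
    return "no evidence of synthesis"


if __name__ == "__main__":
    # Watermark removed by an attacker, but liveness detection still flags the clip.
    print(combined_verdict(DetectionSignals(watermark_found=False, liveness_score=0.93)))
```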

What does this mean for customers using or exploring voice biometrics

Audio generated by high-end TTS systems can fool some voice authentication systems. Therefore, authentication systems need the capability to detect all types of AI-generated audio: voice modulation, synthetic voices, voice clones, and replays of voices. This was our main motivation for launching Pindrop® Pulse as an integrated capability of our multi-factor authentication platform, Pindrop® Passport. If you are unsure whether your authentication system has strong deepfake detection, contact Pindrop and test Pindrop® Passport with Pindrop® Pulse against the most sophisticated TTS systems.
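The point that a good clone can fool voice matching alone can be made concrete with a small decision sketch: deepfake detection gates the voice-biometric result, and a second factor is still required. All field names, the 0.85 match threshold, and the allow/step-up/deny outcomes are hypothetical and do not describe how Pindrop® Passport actually decides.

```python
from dataclasses import dataclass


@dataclass
class CallEvidence:
    voice_match: float      # hypothetical voice-biometric similarity score in [0, 1]
    deepfake_verdict: str   # "synthetic", "not synthetic", or "inconclusive"
    known_device: bool      # hypothetical non-voice factor


def authenticate(ev: CallEvidence, match_at: float = 0.85) -> str:
    """Return 'allow', 'step_up' (request another factor), or 'deny'."""
    # A high-quality clone can score well on voice similarity, so the deepfake
    # verdict is checked first and a strong voice match alone is never enough.
    if ev.deepfake_verdict == "synthetic":
        return "deny"
    # Inconclusive (or unrecognized) verdicts fall back to additional factors.
    if ev.deepfake_verdict != "not synthetic":
        return "step_up"
    if ev.voice_match >= match_at and ev.known_device:
        return "allow"
    return "step_up"


if __name__ == "__main__":
    # A convincing clone: near-perfect voice match, but flagged as synthetic.
    print(authenticate(CallEvidence(voice_match=0.99, deepfake_verdict="synthetic", known_device=True)))
```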

Pindrop continues to stand behind our multi-factor authentication platform, Pindrop® Passport, which includes the new add-on Pindrop® Pulse, trained on a dataset from more than 350 TTS systems. Our deepfake engine has demonstrated >95% accuracy[12] against leading TTS engines, including major new ones launched in the last 12 months such as Meta’s Voicebox, ElevenLabs, PlayHT, Resemble.AI, and VALL-E.

How can OpenAI help put this speculation to bed

The OpenAI blog has caused unnecessary fear and panic in the industry. Decisions made in an environment of speculation are usually suboptimal. We encourage OpenAI to help the industry arrive at its own fact-based decisions by sharing a representative dataset of synthetic voices. Pindrop has shown the path toward better collaboration between deepfake detection technology providers and voice cloning solution providers[7]. Pindrop’s continued research investment in deepfake detection will ensure that Pindrop® Pulse continues to safeguard our customers’ investments in voice biometrics and multi-layered authentication systems.

1. The “OpenAI” name, the OpenAI logo, the “ChatGPT” and “GPT” brands, and other OpenAI trademarks are property of OpenAI. OpenAI is an AI research and deployment company. OpenAI’s mission is to create safe and powerful AI that benefits all of humanity.

2. https://openai.com/blog/navigating-the-challenges-and-opportunities-of-synthetic-voices

3. https://www.pindrop.com/products/pindrop-pulse

4. https://www.pindrop.com/blog/exposing-the-truth-about-zero-day-deepfake-attacks-metas-voicebox-case-study

5. https://huggingface.co/coqui/XTTS-v1

6. https://huggingface.co/coqui/XTTS-v2

7. https://www.pindrop.com/blog/pindrop-and-respeecher-join-forces-to-help-keep-voice-cloning-away-from-harmful-uses

8. https://openai.com/blog/navigating-the-challenges-and-opportunities-of-synthetic-voices

9. https://spectrum.ieee.org/meta-ai-watermarks

10. Wired.com: Researchers Tested AI Watermarks—and Broke All of Them, Oct 2023

11. https://www.pindrop.com/blog/does-watermarking-protect-against-deepfake-attacks

12. https://www.pindrop.com/blog/pindrop-pulse-excels-in-an-independent-npr-audio-deepfake-experiment