Voice is a leading input interface for Internet of Things (IoT) devices, largely thanks to the success of automatic speech recognition (ASR), voice biometrics, and natural language processing (NLP). This progress also brings an increased risk of attacks over the voice channel. Attackers are reportedly using diverse voice spoofing techniques to reproduce the voice of a genuine user and thereby gain unauthorized access to sensitive information such as bank and insurance accounts.

Voice spoofing techniques include mimicry, speech synthesis, voice conversion, and replay attacks. Among them, the replay attack is the most accessible: it requires no engineering or imitation skills, since an attacker only needs to find an audio or video recording of the genuine user and play it back. Unfortunately, state-of-the-art voice biometric systems are highly vulnerable to replay attacks, with a false acceptance rate as high as 77%.
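To make a figure like "77% false acceptance" concrete: the false acceptance rate (FAR) is the fraction of spoofed trials that a system accepts as genuine at a given decision threshold, and the false rejection rate (FRR) is the fraction of genuine trials it rejects. The minimal sketch below computes both from detection scores. The scores and thresholds are made-up illustration values, and the convention that a higher score means "more likely genuine" is an assumption.

```python
def false_acceptance_rate(spoof_scores, threshold):
    """Fraction of spoofed (e.g. replayed) trials accepted as genuine.

    Assumes higher scores mean 'more likely genuine' and that a trial is
    accepted when its score is at or above the threshold.
    """
    if not spoof_scores:
        raise ValueError("need at least one spoofed trial")
    return sum(s >= threshold for s in spoof_scores) / len(spoof_scores)


def false_rejection_rate(genuine_scores, threshold):
    """Fraction of genuine trials rejected (score below the threshold)."""
    if not genuine_scores:
        raise ValueError("need at least one genuine trial")
    return sum(s < threshold for s in genuine_scores) / len(genuine_scores)


# Hypothetical scores, for illustration only.
replayed = [0.91, 0.42, 0.77, 0.88]
genuine = [0.95, 0.83, 0.64, 0.97]

print(false_acceptance_rate(replayed, 0.5))  # 0.75
print(false_rejection_rate(genuine, 0.5))    # 0.0
```

Raising the threshold to 0.9 in this toy example lowers the FAR to 0.25 but raises the FRR to 0.5. This trade-off is why anti-spoofing results are often reported as an equal error rate (EER), the operating point where the two rates coincide.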

To foster joint work on voice spoofing countermeasures, speech researchers organized the ASVspoof 2017 challenge [1], which aims to assess and improve the security of voice biometrics against a wide variety of replay attacks.

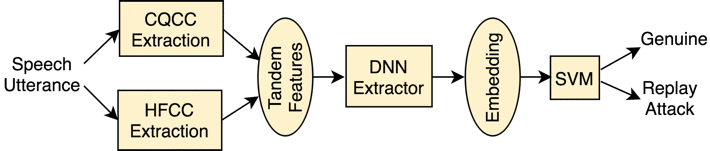

Pindrop researchers took part in ASVspoof 2017 and proposed an efficient system for detecting replay attacks. Their work, which will be presented at Interspeech 2017 [2], makes two main contributions. The first is at the front end of the anti-spoofing system, where new low-level features, coined “High-Frequency Cepstral Coefficients” (HFCC), are proposed. The second is at the back end, where a deep neural network extracts high-level features that carry relevant information about the channel artifacts present during a replay attack.
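This post does not spell out how HFCC are computed, but the name suggests cepstral features focused on the high-frequency band. As a rough illustration only, the sketch below computes a generic cepstrum (log power spectrum followed by a discrete cosine transform) restricted to frequencies above a cutoff. The 3.5 kHz cutoff, the Hamming window, the 512-sample frame, and the 20-coefficient output are all hypothetical choices, not the configuration used in [2]. It uses a naive DFT so it runs with the Python standard library alone.

```python
import cmath
import math


def power_spectrum(frame):
    """Power spectrum |X[k]|^2 of a real frame for bins k = 0..N/2 (naive DFT)."""
    n = len(frame)
    return [
        abs(sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))) ** 2
        for k in range(n // 2 + 1)
    ]


def dct_ii(values, num_coeffs):
    """First num_coeffs coefficients of an unnormalized DCT-II."""
    n = len(values)
    return [
        sum(v * math.cos(math.pi * k * (i + 0.5) / n) for i, v in enumerate(values))
        for k in range(num_coeffs)
    ]


def high_band_cepstrum(frame, sample_rate, cutoff_hz=3500.0, num_coeffs=20, eps=1e-10):
    """Cepstral coefficients computed only from spectrum bins above cutoff_hz.

    HYPOTHETICAL parameters: cutoff, window, and coefficient count are
    illustrative, not the HFCC configuration described in [2].
    """
    n = len(frame)
    # Hamming window to reduce spectral leakage between bins.
    window = [0.54 - 0.46 * math.cos(2 * math.pi * t / (n - 1)) for t in range(n)]
    spectrum = power_spectrum([x * w for x, w in zip(frame, window)])
    # Bin k is centred at k * sample_rate / n Hz; keep bins at or above the cutoff.
    first_bin = math.ceil(cutoff_hz * n / sample_rate)
    high_band = spectrum[first_bin:]
    if len(high_band) < num_coeffs:
        raise ValueError("too few high-frequency bins for the requested coefficients")
    # eps keeps the log finite for (near-)silent bins.
    log_power = [math.log(p + eps) for p in high_band]
    return dct_ii(log_power, num_coeffs)


sr = 16000
frame = [math.sin(2 * math.pi * 5000 * t / sr) for t in range(512)]  # 32 ms, 5 kHz tone
coeffs = high_band_cepstrum(frame, sr)
print(len(coeffs))  # 20
```

In a real front end, these per-frame vectors would be computed over overlapping frames of an utterance and fed to the back end; an FFT (for example from NumPy or SciPy) would replace the naive DFT.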

Coupled with a discriminative classifier such as a support vector machine (SVM), the proposed deep features detect replay attacks with very high accuracy. The improvement is significant: the error rate drops by 53% compared with the baseline system, which uses constant-Q cepstral coefficients (CQCC) with Gaussian mixture models (GMMs). Furthermore, the proposed system works very well on short utterances (under 1 second) and has a very low CPU and memory footprint.
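The back end described above pairs DNN-derived features with a discriminative classifier. The minimal sketch below shows only the classifier half: a linear SVM trained with Pegasos-style stochastic sub-gradient descent on the L2-regularized hinge loss. The 2-D toy vectors are hypothetical stand-ins for the deep features, and the training method, regularization strength, and label convention (+1 genuine, -1 replay) are assumptions, not the setup used in [2].

```python
import random


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def train_linear_svm(features, labels, lam=0.01, epochs=200, seed=0):
    """Linear SVM via Pegasos-style SGD on the L2-regularized hinge loss.

    labels: +1 (genuine) or -1 (replay), a hypothetical convention.
    A constant 1.0 is appended to every vector so the last weight acts as a
    (regularized) bias term.
    """
    if not features or len(features) != len(labels):
        raise ValueError("need matching, non-empty features and labels")
    data = [(list(x) + [1.0], y) for x, y in zip(features, labels)]
    w = [0.0] * len(data[0][0])
    rng = random.Random(seed)
    t = 0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            t += 1
            eta = 1.0 / (lam * t)  # Pegasos step size
            violated = y * _dot(w, x) < 1.0  # hinge loss is active
            # Gradient step for the regularizer: shrink all weights.
            w = [(1.0 - eta * lam) * wj for wj in w]
            if violated:
                # Sub-gradient step for the hinge loss.
                w = [wj + eta * y * xj for wj, xj in zip(w, x)]
    return w


def predict(w, x):
    """Return +1 (genuine) or -1 (replay) for a feature vector x."""
    return 1 if _dot(w, list(x) + [1.0]) >= 0 else -1


# Hypothetical 2-D "deep features", for illustration only.
genuine = [(2.0, 1.5), (1.5, 2.5), (2.5, 2.0)]
replay = [(-2.0, -1.5), (-1.5, -2.5), (-2.5, -2.0)]
w = train_linear_svm(genuine + replay, [1] * 3 + [-1] * 3)
print([predict(w, x) for x in genuine + replay])  # [1, 1, 1, -1, -1, -1]
```

Folding the bias into the weight vector keeps the update rule uniform at the cost of regularizing the bias as well. In practice, an established SVM library with a tuned kernel and regularization would be a more likely choice than this hand-rolled trainer.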