Is your voice security stack ready for AI attacks?

Healthcare fraud is at a breaking point.

AI + legacy security = unprecedented fraud.

Legacy security leaves your org vulnerable

Armed with stolen data, AI callers easily bypass KBAs, OTPs, and other legacy security checks—then exploit workflows to change credentials and steal accounts.

Deepfakes and bots steal funds

With access, automation, and synthetic manipulation, attackers drain HSA/FSA funds and reroute benefits—exposing PHI and causing financial losses.

Call times suffer under bot swarms

Automated bots flood contact centers, probing the IVR and monopolizing agents’ time. This surge drives up wait times and blocks legitimate callers.

1,210% surge in AI fraud in 2025.³

Our researchers uncovered just how hard AI attacks are hammering healthcare. Discover how these scams are reshaping digital trust.

Defend your real-time voice interactions against AI attacks.

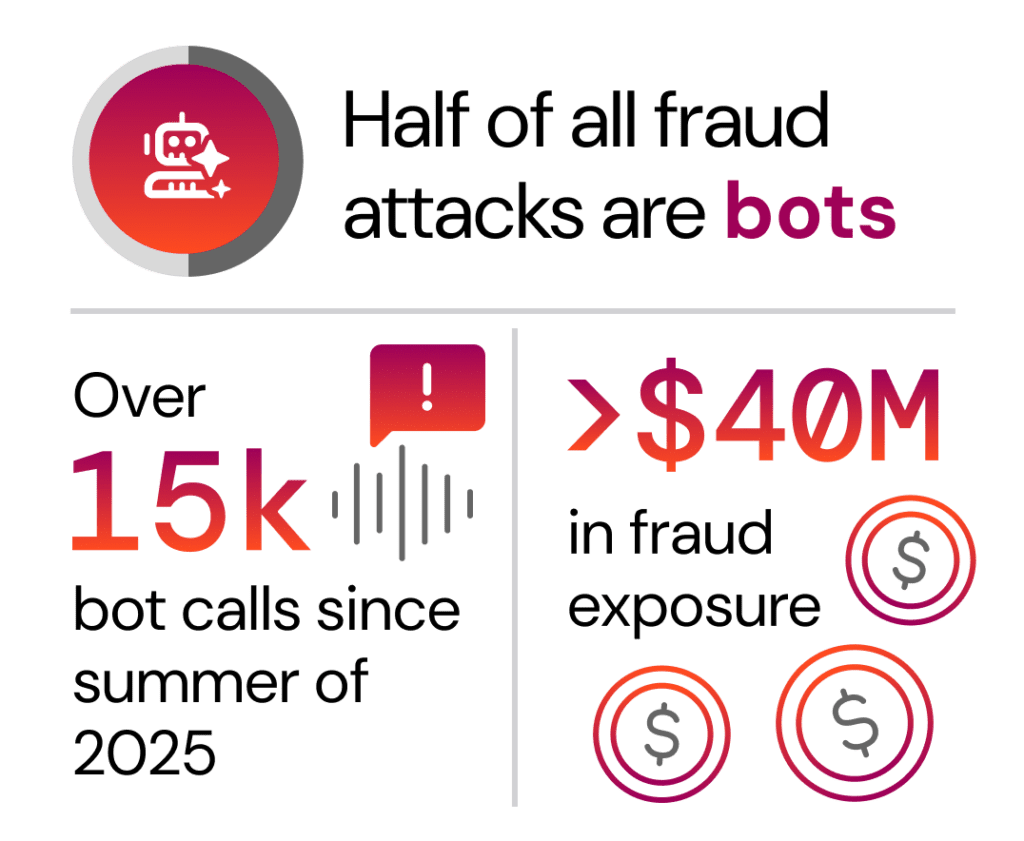

What we saw at a U.S. healthcare provider.⁴

Bot attacks are rampant. Fraud exposure is high. Defenses are needed to detect attacks in real time.

Fortify your security.

1 Pindrop, “2025 Voice Intelligence and Security Report,” June 2025.

2 U.S. Department of Health and Human Services, Office of Inspector General, “2025 National Health Care Fraud Takedown,” 2025.

3 Pindrop analysis of AI fraud data, January to December 2025.

4 Anonymous Pindrop healthcare data collected in 2025.