
Beyond Voice and Video: Tackling the Rise of Deepfake Text

Kevin Stowe

Research Scientist • November 25, 2025 (UPDATED ON December 1, 2025)


Deepfake technology is becoming ever more prevalent, and the risks are wide-ranging. Threats in retail scenarios, impersonations of world leaders, and fraudulent job interviews are just a few of the myriad ways attackers can exploit deepfake technology. These attacks typically target two areas: voice, where AI models generate or alter audio to mislead people, and video, where deepfake technology changes an attacker’s appearance or creates an entirely synthetic identity.

However, attackers are increasingly using modern generative text models to mount deepfake text attacks, which opens up a third area of risk.

What is deepfake text detection, and why does it matter?

Deepfake text (i.e., AI-generated or AI-altered writing that falsely claims a human as author) is emerging as a subtle but significant threat. Because large language models (LLMs) now produce fluent, context-aware prose, malicious actors can use them to generate fake news articles, impersonate experts, spam disinformation, or forge written endorsements and statements.

The danger is that many readers won’t detect the deception, especially when the text aligns with existing biases or expectations. So, how do we fight back against this threat?

Deepfake text detection: Challenges and defenses

Detecting deepfake text is becoming one of the most complex problems in the fight against generative fraud. As large language models get better at writing like humans, it’s increasingly difficult to tell whether something was written by a person or by a machine pretending to be one. In fact, one study has shown that human performance in detecting deepfakes peaks at ~57% accuracy.

Researchers and companies are racing to close that gap. Academic groups have developed systems like MAGE: Machine-generated Text Detection in the Wild, which assess whether detectors can accurately identify AI-generated writing across various styles and topics. Their research, however, shows that even top detectors only achieve ~84% accuracy. Others, such as AuthGuard, focus on spotting logical inconsistencies or unnatural patterns that reveal when an AI model, rather than a person, crafted the text.

Meanwhile, industry teams are exploring “stylometric” approaches, which build a digital profile of how an individual writes and flag text that doesn’t fit that person’s usual voice. For example, ChatGPT is notorious for overusing the em dash. However, clues like these usually aren’t sufficient on their own to distinguish human-written from machine-generated text.
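To make the stylometric idea concrete, here is a minimal sketch in Python. The three features and the function name `stylometric_features` are illustrative assumptions, not the features any particular detector uses. The sketch shows the kind of surface signals (punctuation habits, sentence length, vocabulary variety) that such approaches compare against a writer's usual profile.

```python
import re

EM_DASH = "\u2014"  # the punctuation mark mentioned above


def stylometric_features(text: str) -> dict[str, float]:
    """Compute a few toy stylometric features for one text.

    Hypothetical feature set chosen for illustration only.
    """
    words = re.findall(r"[A-Za-z']+", text.lower())
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    n_words = max(len(words), 1)  # avoid division by zero on empty input
    return {
        # How often the author reaches for an em dash.
        "em_dashes_per_100_words": 100 * text.count(EM_DASH) / n_words,
        # Average number of words per sentence.
        "avg_sentence_length": len(words) / max(len(sentences), 1),
        # Vocabulary variety: distinct words divided by total words.
        "type_token_ratio": len(set(words)) / n_words,
    }
```

Comparing these values for a new message against the same values computed from a person's earlier writing gives a rough "does this sound like them" signal. As noted above, signals this shallow are rarely decisive on their own.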

Pindrop is building upon this work, researching and testing new approaches to deepfake text detection.

Laying the groundwork

We started by collecting a diverse dataset comprising both human-written and machine-generated texts. Datasets like this help researchers understand how language models behave and what distinguishes their writing from that of humans. We then tested an array of publicly available detection systems on this curated dataset. Some of these systems had been trained specifically to recognize AI-generated text, while others relied on statistical patterns in language to estimate whether a piece of writing came from a person or a machine.

Building our test model

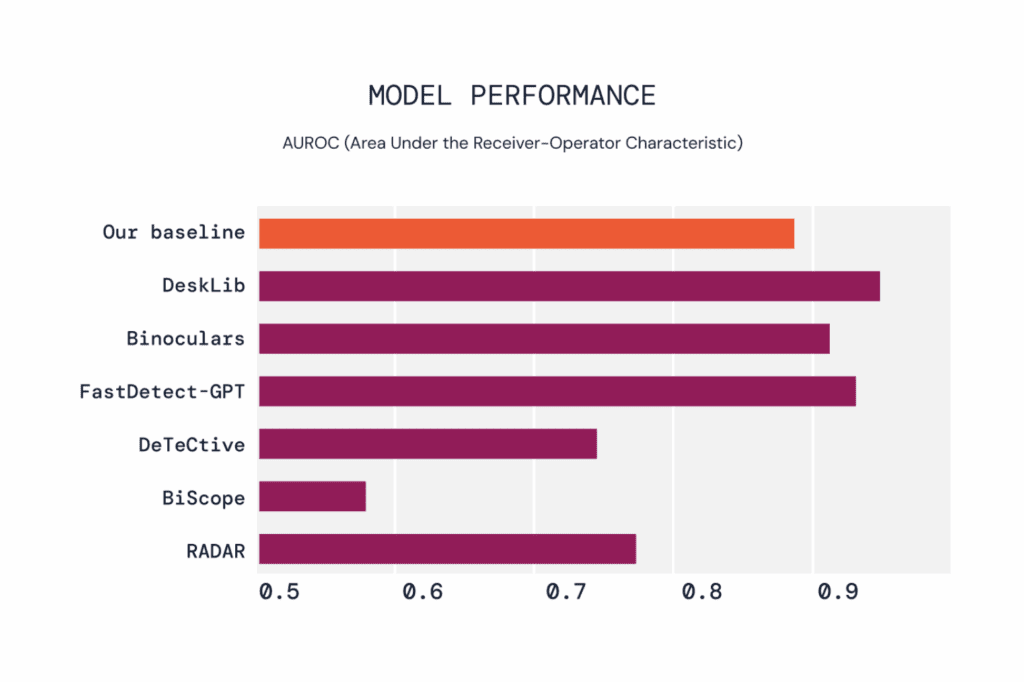

With a clearer picture of the data and existing models, we trained our own internal research models to identify textual deepfakes. Whereas many public models aim only to spot AI-generated writing, our goal is to identify cases where generative text is used maliciously to deceive or manipulate. We developed a research model using a modern transformer architecture, tailored it to this use case, and trained it on a combination of targeted, relevant data. We then benchmarked its performance against leading publicly available models.

The initial test model performs strongly, surpassing most available models. This performance is extremely promising, but the variety of deepfake attacks is ever-growing.

The real test is adaptability: how quickly can we adapt the model to new attack styles without sacrificing accuracy?

Adapting to evolving attacks

The real strength of our research model is its flexibility. We can rapidly adapt to new generative attacks because we have complete control of the architecture, data, and predictive capabilities.

Consider a simple example: an attacker tries to get AI-generated text past spam filters designed to detect machine-written content. When that fails, they pivot. Instead of generating plain text, they instruct the model to mimic a different style: “Write this text as if you were a young person, using youthful slang.” The message stays the same, but the altered tone helps it slip past defenses. Even simple tricks, like adding invisible spaces, inserting typos, or replacing words with synonyms, can effectively fool detectors.
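The invisible-space trick is easy to reproduce. The sketch below is a simplified illustration rather than a description of any real attack tool or of Pindrop's defenses. The helper names `insert_zero_width` and `normalize` are hypothetical. The first function simulates the manipulation, and the second shows one common preprocessing step a detector pipeline might apply before scoring text.

```python
import unicodedata

# Common zero-width / invisible code points (an illustrative, non-exhaustive set).
ZERO_WIDTH = {"\u200b", "\u200c", "\u200d", "\u2060", "\ufeff"}


def insert_zero_width(text: str, every: int = 4) -> str:
    """Simulate the attack: add a zero-width space after every `every`-th character."""
    out = []
    for i, ch in enumerate(text, start=1):
        out.append(ch)
        if i % every == 0:
            out.append("\u200b")
    return "".join(out)


def normalize(text: str) -> str:
    """Defensive preprocessing: apply NFKC normalization, then drop zero-width characters."""
    text = unicodedata.normalize("NFKC", text)
    return "".join(ch for ch in text if ch not in ZERO_WIDTH)


original = "Claim your prize now"
attacked = insert_zero_width(original)
print(attacked == original)             # the strings differ, though they render alike
print(normalize(attacked) == original)  # normalization recovers the original text
```

Normalization of this kind only undoes character-level tricks. Typos, synonym swaps, and style changes alter the visible text itself, so they need a detector that has actually seen such variations.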

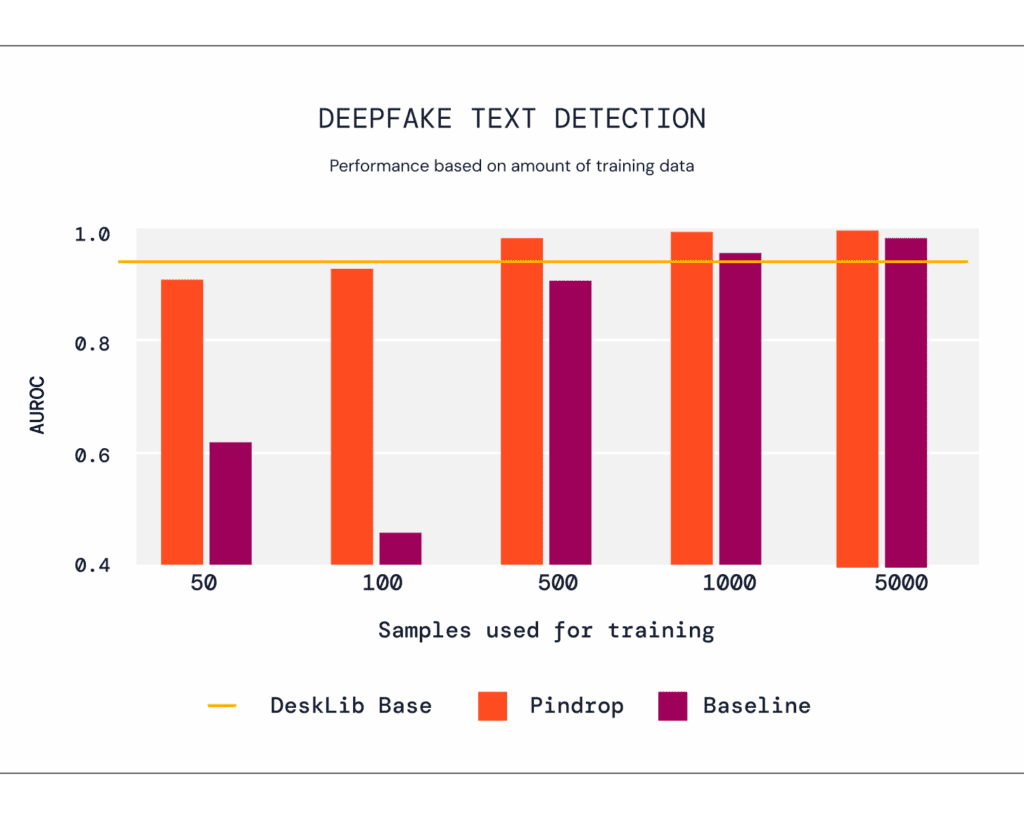

To combat these tactics, we tested our research model on a new, unseen dataset composed of evasive variations. By systematically feeding our test model small amounts of data and evaluating its performance, we measured how quickly it could adapt to entirely new types of attacks.
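An evaluation of this kind can be sketched as a learning curve: train on progressively larger slices of the new attack data and measure accuracy on a held-out set each time. The code below is a minimal sketch under stated assumptions. `adaptation_curve`, `accuracy`, and the toy `train_keyword_detector` are hypothetical names, and the keyword detector is only a stand-in for fine-tuning a real model, which the article does not describe in detail.

```python
import random
from typing import Callable, Sequence

Example = tuple[str, int]          # (text, label), where 1 = machine-generated
Predictor = Callable[[str], int]


def accuracy(predict: Predictor, data: Sequence[Example]) -> float:
    """Fraction of examples whose predicted label matches the true label."""
    return sum(predict(text) == label for text, label in data) / len(data)


def adaptation_curve(
    train_fn: Callable[[Sequence[Example]], Predictor],
    pool: Sequence[Example],
    test_set: Sequence[Example],
    sizes: Sequence[int],
    seed: int = 0,
) -> list[tuple[int, float]]:
    """Train on the first n shuffled pool examples for each n; report test accuracy."""
    shuffled = list(pool)
    random.Random(seed).shuffle(shuffled)
    return [(n, accuracy(train_fn(shuffled[:n]), test_set)) for n in sizes]


def train_keyword_detector(examples: Sequence[Example]) -> Predictor:
    """Toy stand-in for model adaptation: flag tokens seen only in machine-labeled text."""
    machine, human = set(), set()
    for text, label in examples:
        (machine if label == 1 else human).update(text.lower().split())
    markers = machine - human
    return lambda text: int(any(tok in markers for tok in text.lower().split()))
```

Plotting the returned `(n, accuracy)` pairs for two models side by side shows which one reaches a given accuracy with fewer new samples, which is the comparison described in this section.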

The best-performing public model remains strong, even under attack, correctly identifying approximately 94% of samples. However, with only a few hundred samples, the Pindrop research model outperforms that benchmark, eventually reaching near-perfect accuracy. It also adapts to new attack types faster than a standard baseline model.

These results highlight how even simple manipulations are surprisingly effective at fooling generative text detection systems. Research from projects like RAID, TOCSIN, and GREATER has shown that adding noise, rephrasing sentences, or changing writing style can significantly reduce detector accuracy. Our approach is intended to address that problem directly. After exposure to a small number of new examples, the research model can rapidly recalibrate and perform well across entirely new attacks, datasets, and model families.

Defending against the next wave of deepfakes

Audio and video deepfakes have already reshaped the fraud landscape, and Pindrop technology has led the way in defending against them. As generative AI expands into text and other modalities, the threat surface is growing faster than ever. The Pindrop research team’s work in deepfake text detection positions Pindrop at the bleeding edge of combating new threats as they emerge.
