The $40B Deepfake Crisis: 3 Lessons Every Enterprise Leader Needs to Hear

Adriana Gil Miner

CMO • November 21, 2025 (UPDATED ON November 21, 2025)

Fraud has entered the meeting room

As enterprises move their most sensitive interactions—financial, operational, and strategic—into online meetings, they’re relying on virtual spaces that were never designed to withstand AI-generated threats.

At Pindrop, we analyzed 1.2 billion calls last year and found that 1 in every 599 contact center calls was fraudulent, double the rate from just four years ago. Those same fraud patterns don’t stay contained; they follow the channels where sensitive interactions, such as hiring and money movement, occur.

Not long ago, sensitive discussions held over video or voice platforms were relatively secure, protected by encryption, authentication protocols, and built-in trust in what you could see. Attackers needed access, patience, and technical skill to infiltrate or manipulate those interactions.

GenAI has destroyed those barriers. With convincing AI-generated impersonations, fraudsters can infiltrate these conversations with ease, posing as someone you know and trust.

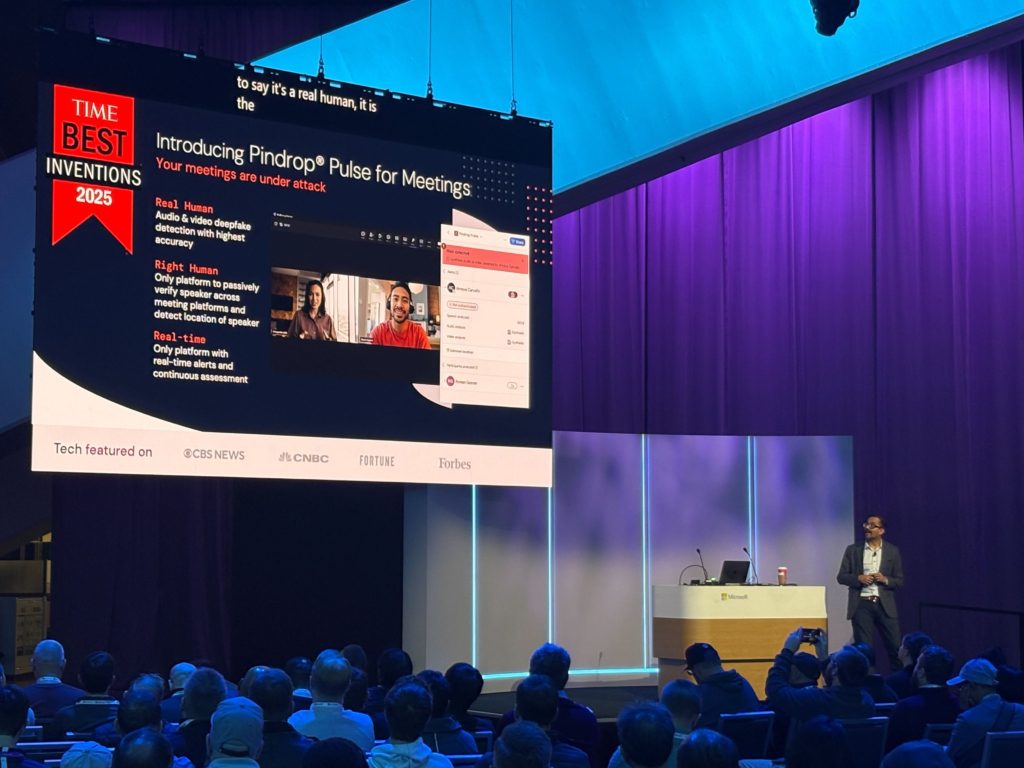

At Microsoft Ignite 2025, we spoke with enterprise leaders about the one question that sits at the center of today’s security strategy: how do we protect trust in these remote interactions?

Here are the three takeaways from that discussion, the realities we’re seeing across industries, and what organizations need to do next.

1. Human impersonation still reigns supreme

Across our network, 98% of all fraud detected in contact centers is human impersonation, while 2% now involves AI-generated impersonation. We're seeing the same pattern inside virtual meetings, where fraud is still overwhelmingly driven by people posing as others: using social engineering, hiding behind VPNs or proxies to appear to be in a location they're not, or simply having a different person stand in for them.

In HR workflows, this human element of deception is especially visible. Based on internal Pindrop hiring data, 41% of fraudulent applicants present themselves as U.S.-based candidates but connect from non-U.S. IP addresses, even when companies are hiring exclusively within the United States. Even more concerning, 6% to 8% of candidates who advance to a second-round technical interview are engaged in proxy fraud, where someone else attends the interview in their place.

And the truth is, humans are bad at spotting AI. A recent study found that people can identify AI-generated content only about 50% of the time—no better than a coin toss. At a time when deepfakes can be made in minutes, human detection failure isn’t a flaw—it’s a massive security gap.

At Ignite, our goal was to engage with enterprises that need systems capable of distinguishing the right person from the wrong one, in real time and across every channel.

2. AI has supercharged impersonation

At Pindrop, our analysis revealed a 756% year-over-year increase in deepfake or replayed voices across enterprise phone calls, indicating that what began as isolated experimentation has become widespread.

That growth reflects a simple truth: as AI tools become more realistic and accessible, they lower the barrier for impersonation. And worse, what began in the contact center is no longer contained there.

Fraud follows the money. As sensitive transactions and approvals move into video conferencing, the threat has followed suit.

We’re now seeing this play out across remote hiring. Roughly 1 in 4 North Korean IT workers applying for remote jobs are using deepfakes to obscure their true identity, or possibly to resemble the individual whose government-issued ID they’ve stolen. Whatever the motive, the outcome is the same: audio and video deepfakes are being used to conceal identity and bypass verification inside enterprise workflows.

Deepfakes aren’t coming; they’re here, and they’re reshaping how organizations think about digital trust.

3. Trust requires team defense

Deepfake and impersonation fraud aren't isolated security incidents; they're cross-functional risks. They touch IT, security, HR, finance, and help desk operations. Preserving digital trust now requires these groups to collaborate rather than operate in silos. That means aligning on three operational imperatives:

Secure the channel

Every meeting must receive the same level of scrutiny as a sensitive transaction on any other channel (e.g., voice, digital, or mobile).

Surface real-time alerts

If something appears synthetic, the people responsible for the sensitive transaction (e.g., hiring managers or help desk staff) should know immediately.

Integrate with security workflows

Detections shouldn’t reside in dashboards; they should trigger automatic responses and notifications.
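
To make the last two imperatives concrete, here is a minimal sketch of how a detection could be routed straight to the people who own a workflow instead of sitting in a dashboard. It is an illustration only, not how Pindrop products work: the `Detection` fields, the `AlertRouter` class, the 0.8 threshold, and both handler functions are hypothetical names and values chosen for this example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Detection:
    """A hypothetical detection event; field names are illustrative only."""
    meeting_id: str
    participant: str
    synthetic_score: float  # 0.0 (likely genuine) to 1.0 (likely synthetic)
    workflow: str           # e.g. "hiring" or "helpdesk"


Handler = Callable[[Detection], str]


class AlertRouter:
    """Sends detections at or above a threshold to each workflow's handlers."""

    def __init__(self, threshold: float = 0.8) -> None:
        # The threshold is an assumed value, not a recommended setting.
        self.threshold = threshold
        self._handlers: Dict[str, List[Handler]] = {}

    def register(self, workflow: str, handler: Handler) -> None:
        self._handlers.setdefault(workflow, []).append(handler)

    def route(self, detection: Detection) -> List[str]:
        # Low-scoring detections trigger nothing.
        if detection.synthetic_score < self.threshold:
            return []
        # Every handler registered for the workflow runs automatically.
        return [h(detection) for h in self._handlers.get(detection.workflow, [])]


def notify_hiring_manager(d: Detection) -> str:
    return f"ALERT hiring manager: {d.participant} in {d.meeting_id} may be synthetic"


def open_security_ticket(d: Detection) -> str:
    return f"TICKET opened: {d.workflow} meeting {d.meeting_id}, score {d.synthetic_score:.2f}"


router = AlertRouter(threshold=0.8)
router.register("hiring", notify_hiring_manager)
router.register("hiring", open_security_ticket)

actions = router.route(Detection("mtg-001", "candidate-42", 0.93, "hiring"))
for action in actions:
    print(action)
```

In this sketch a single high-scoring detection in a hiring meeting produces both a real-time alert and a security ticket, while a low score or a workflow with no registered handlers produces nothing. In a real deployment the handlers would call whatever paging, chat, or ticketing systems the organization already uses.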

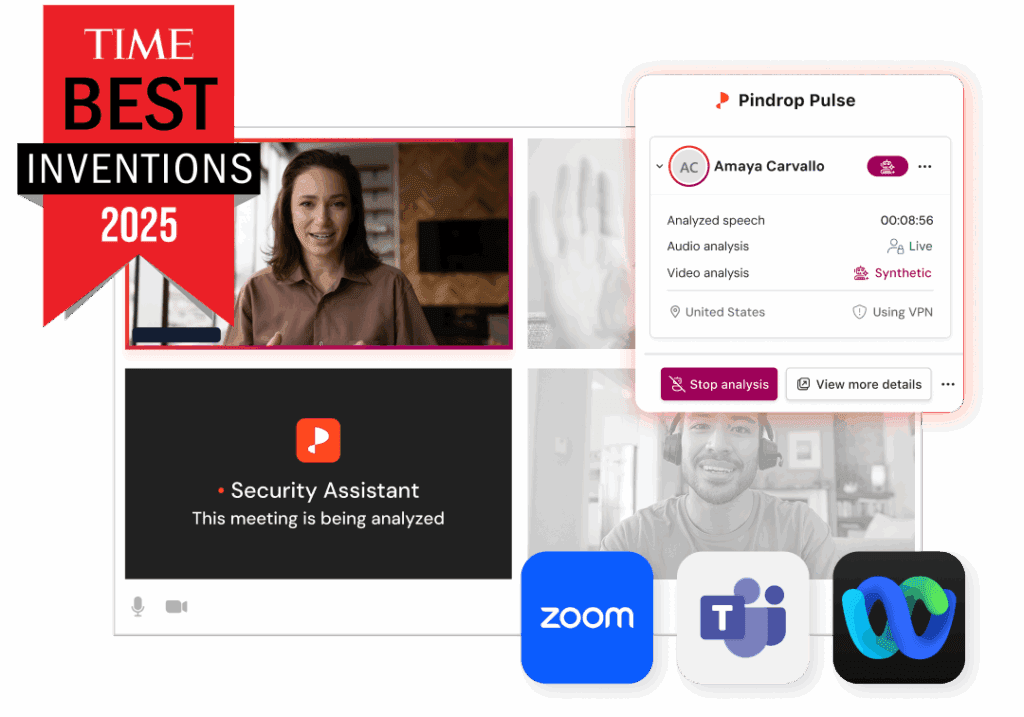

That's why we built Pindrop® Pulse for Meetings: to give enterprises real-time visibility into whether a meeting host is talking to a real person and the right person.

Looking forward: You can’t secure what you can’t trust

AI-driven deception is accelerating—and most companies are still playing catch-up.

Without immediate investment in deepfake defense and participant authentication, enterprises are leaving the door wide open to GenAI fraud.

The time to act is now.