One meeting could cost you millions.

Deepfake candidates in virtual interviews

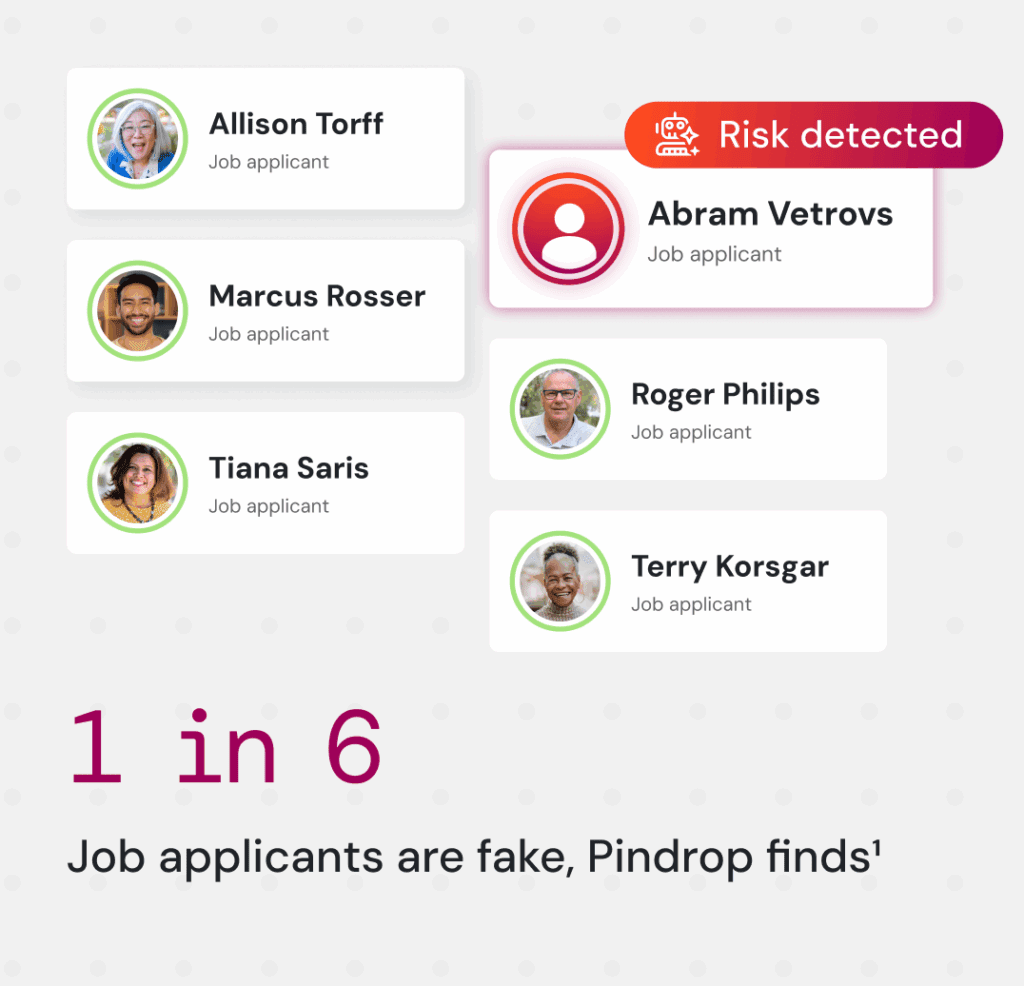

Recruiters are now facing a new wave of synthetic applicants — fake candidates using AI-generated faces, voices, or even live proxies to impersonate real professionals. In Pindrop’s own data, 1 in 6 applicants showed signs of fraud, and 1 in 4 DPRK-linked candidates used a deepfake during live interviews. Pulse verifies both the “real human” and “right human,” authenticating voices and detecting face swaps before a bad hire compromises your systems.

Fraudulent authorizations

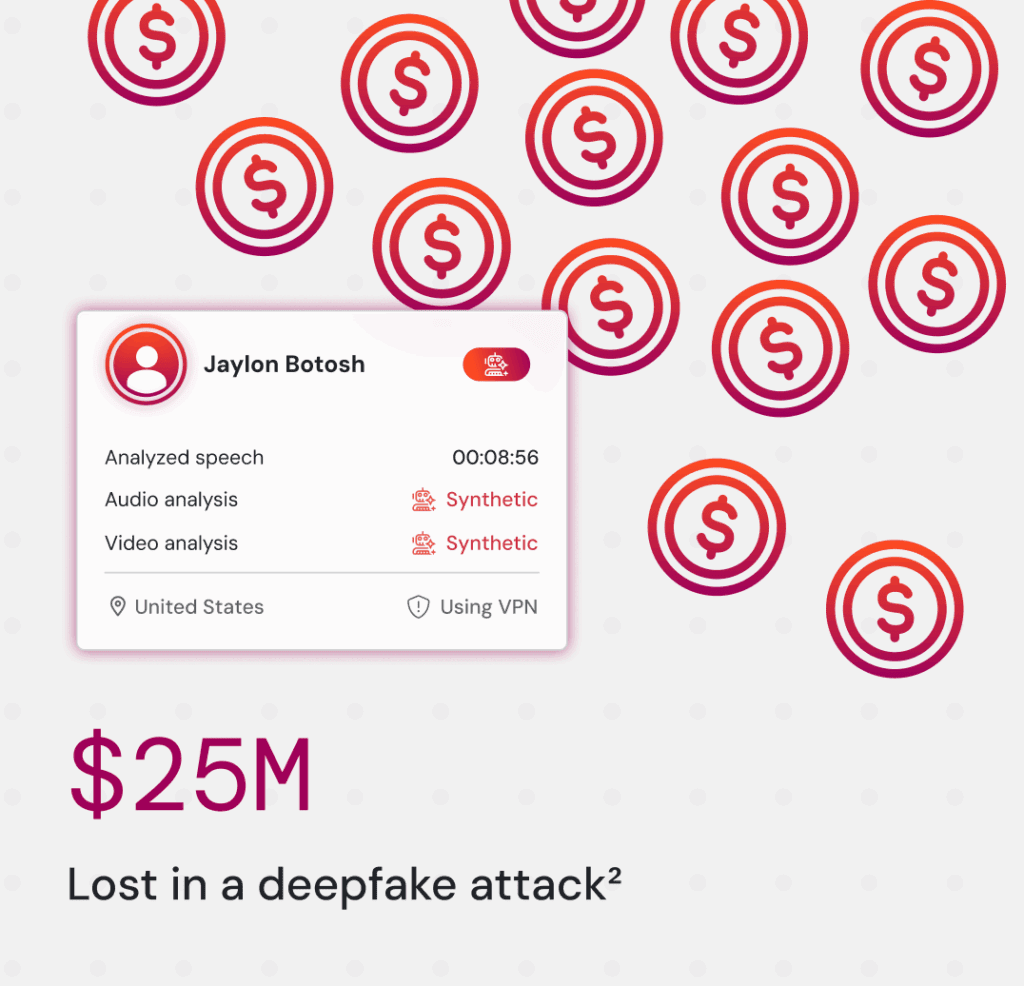

Deepfakes have already triggered multimillion-dollar corporate losses, such as the finance worker who wired $25 million after attending a video meeting with deepfake versions of his CFO and team. Pulse analyzes speech and video in real time to identify impersonation attempts, ensuring that every decision made in a call comes from the right person.

Fake employees and insider threats

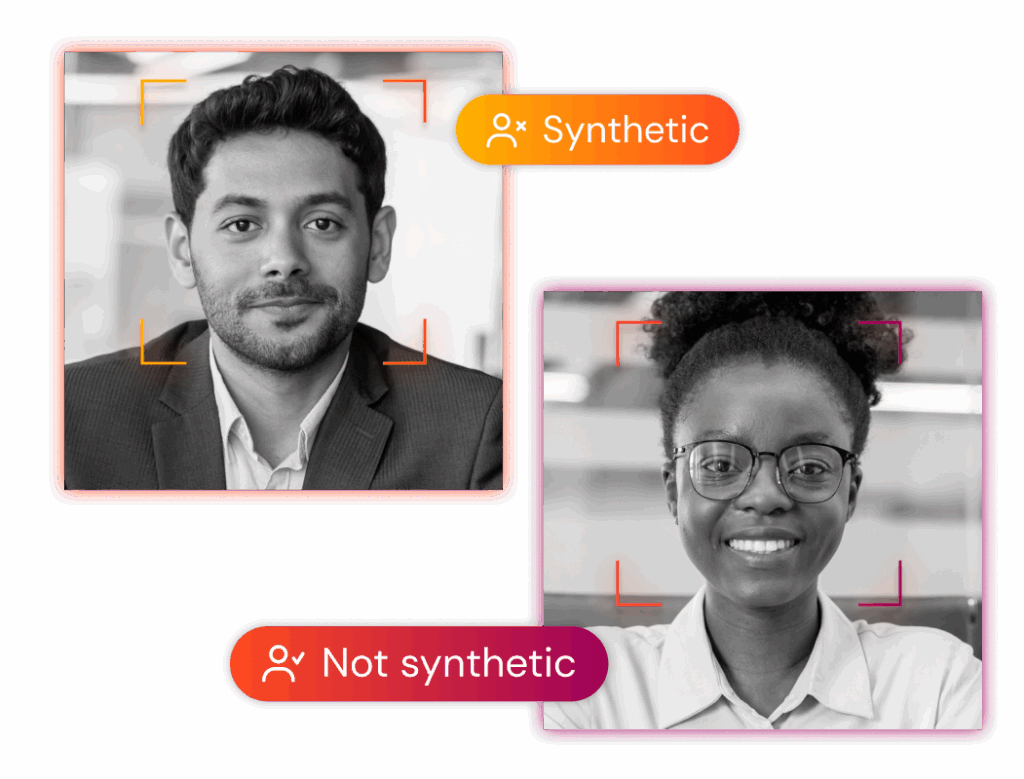

Nation-state actors have begun using synthetic identities to infiltrate corporate meetings, seeking access to IP or internal strategy sessions. Pindrop’s research shows 1 in 343 fake applicants were linked to North Korea, with many using manipulated video or VPNs to mask their true location. Pulse flags these anomalies through continuous location intelligence, alerting teams when a participant’s signal doesn’t match their claimed geography.

From job interview to cyber breach: What Pindrop data reveals

Pindrop found 1 in 343 fake applicants had IP addresses linked to DPRK proxy infrastructure.

Ready to defend your meetings?

1 Based on analysis of applicants to Pindrop’s own fully remote roles, Why Your Hiring Process is Now a Cybersecurity Vulnerability, June 2025

2 CNN, “British engineering giant Arup revealed as $25 million deepfake scam victim,” May 2024

3 Pindrop, Why Your Hiring Process is Now a Cybersecurity Vulnerability, June 2025